Catalysts for Scaling the Intelligent Edge

Combining 5G connectivity with intelligent devices at the edge of the network is creating a framework for building immersive and impactful business solutions. These solutions will transform all types of industries, from agriculture and energy to cities and airports.

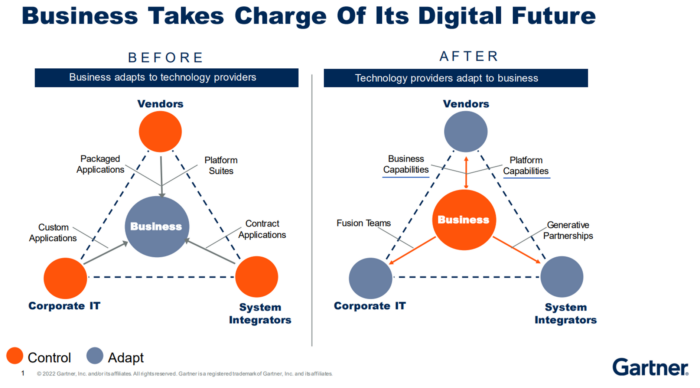

For the intelligent edge to reach its full potential, solution providers will need to deliver AI, IoT, computer vision, robots, and connectivity services at scale. To serve the needs of all businesses, from SMB to enterprise, these solutions must be tailored to specific customer requirements and desired outcomes by matching customer intent with provider capabilities. This requires a paradigm shift in the way we compose and deliver solutions: solutions should adapt to business needs, rather than businesses adapting to “one-size-fits-all” packaged applications, so that businesses can control their digital future.

This year at TM Forum, innovative Catalyst projects are collectively reshaping how technology providers offer value to customers. As an ecosystem enabler, CloudBlue is helping to guide the evolution of a unifying architecture to support everything-as-a-service (XaaS) marketplaces, offering interoperable, intelligent edge-to-cloud services that incorporate information, capability, and intent models adopted by TM Forum.

Taming Complexity through Interoperable Services

A key challenge to digital transformation is the ability to enable end-to-end interoperability across different industries, each having its own environments and interdependent use cases. The Open Group Architecture Framework (TOGAF) defines interoperability as the ability to provide services to and accept services from other systems, and to use the services exchanged to enable them to operate effectively together.

At the core, these services must provide seamless information exchange between systems and enable them to work effectively together. Yet today’s IoT and business systems incorporate disparate, domain-specific semantic models and data communication protocols that limit their extensibility and flexibility across domains, resulting in complex and costly system integrations.

A unifying interoperability standard is critical to minimize the risk, cost, and time involved with implementing these complex systems and to accelerate global adoption of intelligent edge-to-cloud services. This standard needs to be simple, universally adopted, and sustainable. Adopting a unifying model for interoperability will enable intelligent services to form a system of smart systems that deliver high-value outcomes, adding significant economic value.

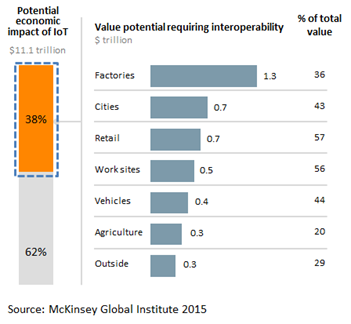

A 2015 McKinsey report identifies interoperability between IoT systems as critical to capturing maximum value, estimating that 40 percent of the potential value, or $4 trillion, requires interoperability.

Similarly, a 2010 report from IBM estimated that $4 trillion of wasted global GDP is preventable by eliminating the inefficiencies among interdependent systems. The challenges we face today cannot be solved by continuing to optimize at the enterprise or domain level—they will only be solved by incentivizing data sharing across an entire solution provider ecosystem able to deliver smart systems that seamlessly interoperate within and across domains.

Evolving a Universal Metamodel for System Interoperability

A standard, universal way to manage system connections, distribute state changes, and orchestrate services can enable a natively digital world, bridging the continuum from cloud to sensor and eliminating the need for costly and complex custom system integrations.

When all systems share a common metamodel for encapsulating service behaviors, capabilities, and purpose, they become inherently interoperable. Yet trying to define a universal metamodel for interoperability that can be simply applied to all system models has its challenges. Namely, it requires a high degree of abstract thinking.

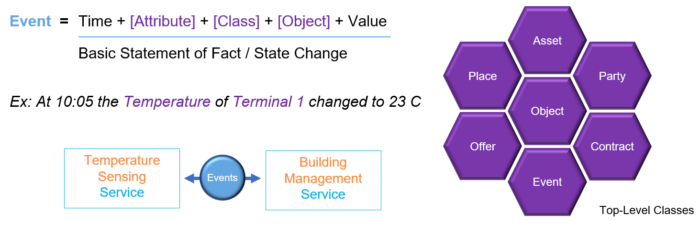

While it is likely impossible to anticipate every concept that may be represented within all systems, a top-level ontology (TLO) can define a standardized set of “primitive” concepts applicable to all systems. Each concept (or class) can represent a category of like things or objects (e.g., asset) which can be uniquely identified. A hierarchy of subclasses (e.g., physical asset) can create any of the more specific concepts that may be needed for any given industry or environment. A class or subclass is defined to reflect the attributes, restrictions, and relationships unique to its objects (or instances).
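As a rough illustration of the class, subclass, attribute, and instance relationships described above, here is a minimal Python sketch. The names `OntologyClass`, `Instance`, `all_attributes`, and the example attributes (`mass_kg`, `engine_hours`) are assumptions made for this example; they are not drawn from the TLO itself.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class OntologyClass:
    """A concept (class) in the ontology. All names here are illustrative."""
    name: str
    parent: Optional["OntologyClass"] = None
    attributes: dict = field(default_factory=dict)  # attribute name -> Python type

    def all_attributes(self) -> dict:
        # A subclass inherits every attribute defined by its ancestors.
        inherited = self.parent.all_attributes() if self.parent is not None else {}
        return {**inherited, **self.attributes}

    def is_a(self, other: "OntologyClass") -> bool:
        # Walk up the parent chain looking for `other`.
        node = self
        while node is not None:
            if node is other:
                return True
            node = node.parent
        return False


@dataclass
class Instance:
    """A uniquely identified object (instance) of a class."""
    object_id: str
    cls: OntologyClass
    values: dict = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Restriction: an instance may only carry attributes its class defines,
        # and each value must have the declared type.
        allowed = self.cls.all_attributes()
        for key, value in self.values.items():
            if key not in allowed:
                raise ValueError(f"{self.cls.name} has no attribute {key!r}")
            if not isinstance(value, allowed[key]):
                raise TypeError(f"{key!r} must be of type {allowed[key].__name__}")


# Hypothetical hierarchy: Asset -> PhysicalAsset -> Tractor
asset = OntologyClass("Asset", attributes={"name": str})
physical_asset = OntologyClass("PhysicalAsset", parent=asset, attributes={"mass_kg": float})
tractor = OntologyClass("Tractor", parent=physical_asset, attributes={"engine_hours": float})

tractor_1 = Instance(
    "tractor-001",
    tractor,
    {"name": "Field tractor", "mass_kg": 5400.0, "engine_hours": 1200.5},
)
```

In this sketch, a tractor is an asset because its parent chain reaches `asset`, and it carries the attributes of every class above it without redefining them.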

Promising work on a unifying TLO is evolving from collaborating members of multiple industry consortia, including the Digital Twin Consortium, Industry IoT Consortium, TM Forum, and Airports Council International. The TLO describes the relationship between several metamodel concepts, starting with the core concepts of “class” and “attribute.” It also describes the relationship between several top-level classes (e.g., asset, party, and place) that are intended to support cross-industry commercial ecosystems composed of dynamic “systems of systems.”

Transitioning from Features and Technologies to Capabilities

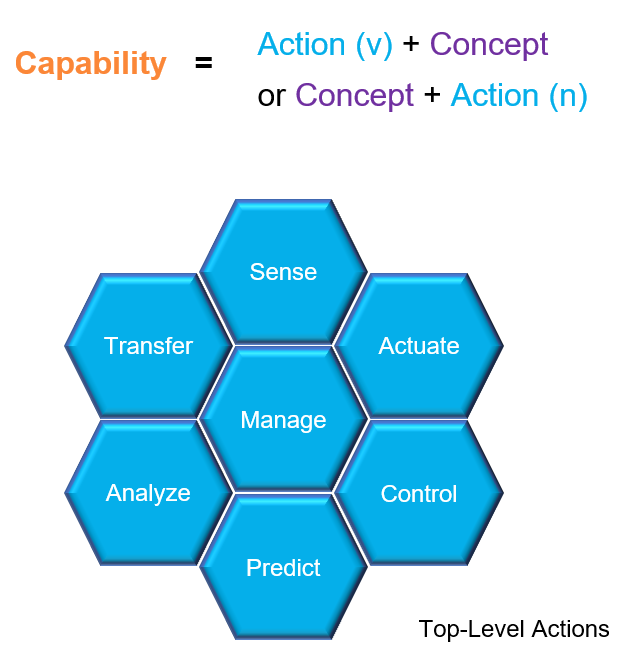

The concept of capability can be defined as “the ability to do something.” Although simple, it is a powerful concept: it provides an abstract, high-level view of a product, system, or organization, offering new ways of dealing with complexity. It has been widely adopted in many areas, including systems engineering, where capabilities are seen as a core concept. The concept is considered particularly relevant for the engineering of complex systems of systems, which rely on the combination of different systems to achieve a particular emergent capability.

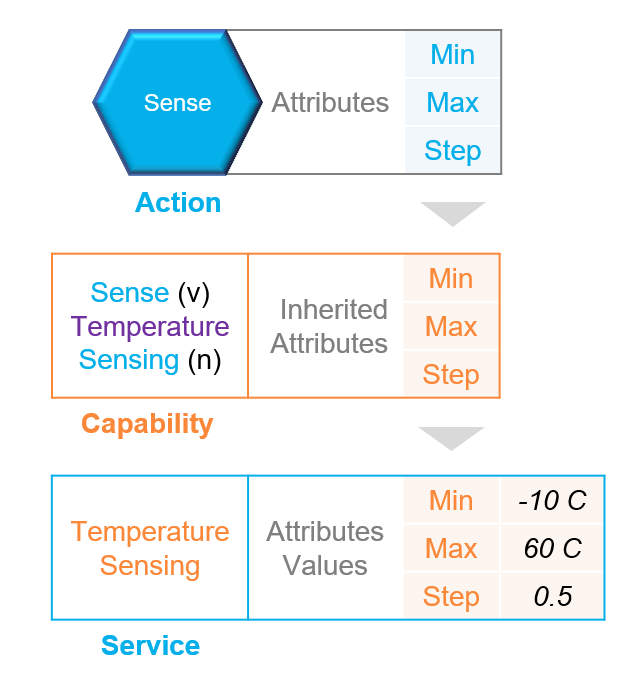

A capability can be defined by associating an action (e.g., sense or sensing) to a concept (e.g., temperature). Beyond a set of top-level actions, a class hierarchy can create any of the more specific actions that may be needed for any given industry or environment.

A sensing capability (provided by a sensor) offers the ability to sense an aspect of the physical world in the form of measurement data. Information from sensor observations may be provided to other systems via an information transfer capability, allowing other systems to use their capabilities to manage and analyze the information. An actuation capability, provided by an actuator, offers the ability to actuate a change in the physical world as directed by a control capability.
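The chain of sensing, information transfer, control, and actuation can be sketched as a small loop. This is a simplified illustration under stated assumptions: the thermostat scenario, the `Observation` type, the JSON-based `transfer` function, and the hysteresis band in `control` are all hypothetical choices for this example.

```python
import json
from dataclasses import asdict, dataclass
from typing import Callable


@dataclass(frozen=True)
class Observation:
    concept: str
    value: float
    unit: str


class TemperatureSensor:
    """Sensing capability: turns an aspect of the physical world into data."""

    def __init__(self, read: Callable[[], float]) -> None:
        self._read = read  # stand-in for real hardware access

    def sense(self) -> Observation:
        return Observation("temperature", self._read(), "degC")


def transfer(observation: Observation) -> Observation:
    """Information transfer capability, simulated as a JSON round trip."""
    wire = json.dumps(asdict(observation))  # sending side
    return Observation(**json.loads(wire))  # receiving side


def control(observation: Observation, setpoint: float, heater_on: bool,
            band: float = 0.5) -> str:
    """Control capability: decide on a command from the observation."""
    if observation.value < setpoint - band:
        return "on"
    if observation.value > setpoint + band:
        return "off"
    return "on" if heater_on else "off"  # inside the band, keep the current state


class Heater:
    """Actuation capability: changes the physical world as directed."""

    def __init__(self) -> None:
        self.on = False

    def actuate(self, command: str) -> None:
        if command not in ("on", "off"):
            raise ValueError(f"unknown command: {command!r}")
        self.on = command == "on"


def run_once(sensor: TemperatureSensor, heater: Heater, setpoint: float) -> None:
    observation = transfer(sensor.sense())
    heater.actuate(control(observation, setpoint, heater.on))
```

Each capability sits behind its own small interface, so a different sensor or actuator could be swapped in without touching the control logic.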

Rather than implementing technologies like IoT and 5G as costly and long-term projects, technologies can be incorporated into standardized capabilities and delivered efficiently as multi-vendor, intelligent edge-to-cloud services. As defined in the OASIS Reference Model, a service can be considered an access mechanism to a capability, but a service can also be “described” based on its capability.

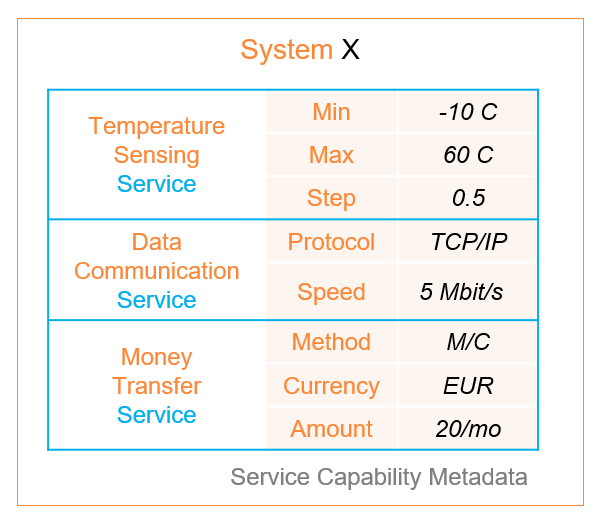

As a type of class, each action comprises a unique set of attributes (aligning to the Action concept of Schema.org). For example, a sense action can comprise a value range (minimum, maximum) and precision (step). A capability (e.g., sense temperature) inherits the attributes of its action. A service can be described by applying specific values to the inherited attributes of its capability. For example, a temperature sensing service may have a sensing range from -10 to 60 degrees Celsius, with a precision of 0.5 degrees. These “features” and limitations can be described using a “model” for a sensing capability.
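The relationship between an action, a capability, and a service description can be sketched as follows, using the temperature sensing example from the text. The type names (`Action`, `Capability`, `ServiceDescription`) and the helpers `in_range` and `quantize` are assumptions for illustration, not part of Schema.org or any published capability model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """An action class and the attribute names it defines."""
    name: str
    attribute_names: tuple


# A sense action with a value range (minimum, maximum) and a precision (step).
SENSE = Action("sense", ("minimum", "maximum", "step"))


@dataclass(frozen=True)
class Capability:
    """An action associated with a concept, e.g. sense + temperature."""
    action: Action
    concept: str

    @property
    def name(self) -> str:
        return f"{self.action.name} {self.concept}"

    @property
    def attribute_names(self) -> tuple:
        # The capability inherits the attributes of its action.
        return self.action.attribute_names


@dataclass
class ServiceDescription:
    """A service described by concrete values for its capability's attributes."""
    capability: Capability
    unit: str
    values: dict

    def __post_init__(self) -> None:
        missing = set(self.capability.attribute_names) - set(self.values)
        if missing:
            raise ValueError(f"missing attribute values: {sorted(missing)}")


def in_range(service: ServiceDescription, reading: float) -> bool:
    # Assumes a sense-style service with minimum and maximum values.
    return service.values["minimum"] <= reading <= service.values["maximum"]


def quantize(service: ServiceDescription, reading: float) -> float:
    # Round a reading to the nearest multiple of the service's step.
    step = service.values["step"]
    return round(reading / step) * step


sense_temperature = Capability(SENSE, "temperature")
temperature_service = ServiceDescription(
    sense_temperature,
    unit="degC",
    values={"minimum": -10.0, "maximum": 60.0, "step": 0.5},
)
```

Two services built on the same capability differ only in the values they supply, which is what makes them comparable and, in principle, interchangeable.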

When these capabilities are defined and modeled by a standards organization, every service based on these standard capabilities becomes inherently interoperable and interchangeable. Capabilities can be standardized for managing the state of any type of entity, including physical assets such as tractors and farms, as well as digital commerce entities such as orders, subscriptions, and usage. The TM Forum, for example, has defined a standard open API for managing each entity type related to commerce as part of its Open Digital Architecture.

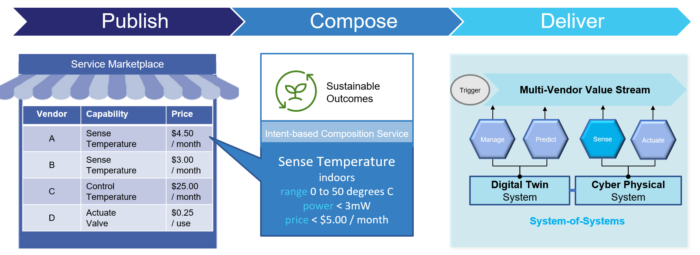

These standardized capabilities can be offered as services by multiple providers and allow an end-customer to compose an interoperable system of systems comprising services tailored to its specific requirements. Gartner predicts that these types of granular capabilities will be instrumental in how multi-vendor solutions are composed. They will enable end-customers to select the best vendor for each required capability, rather than a single vendor for all capabilities.

To advance this shift in solution delivery, standards development organizations (SDOs) and consortia must adopt models for foundational capabilities supporting the TLO, including management of the metamodel concepts and top-level classes (e.g., class management, capability management).

System of Systems Approach to Composing Complex Solutions

A system can comprise multiple capabilities, and each capability can be offered as a service to other systems in exchange for some value. In ArchiMate, a service is defined as a unit of functionality (capability) that a system exposes to its environment while hiding internal operations, and that provides a certain value.

In a natively digital world, systems will dynamically connect and interact in real-time, forming and re-forming interdependent and interoperable systems of systems. Each “constituent” system is “independently operable” but can be dynamically connected when needed to achieve a certain higher goal. Complex systems-of-systems rely on the combination of different systems for achieving an emergent capability.

These systems need the ability to connect based on current context and understanding of their own capabilities.

This requires a “matchmaking” mechanism enabling both information and value exchange that does not rely on prior knowledge. By using a universal metamodel for system interoperability, all capability-based services can be described through a system’s metadata, which can be shared and understood by other systems. These services can include primary capabilities (e.g., temperature sensing) as well as supporting capabilities (e.g., data communication and money transfer), enabling both information and value exchange.

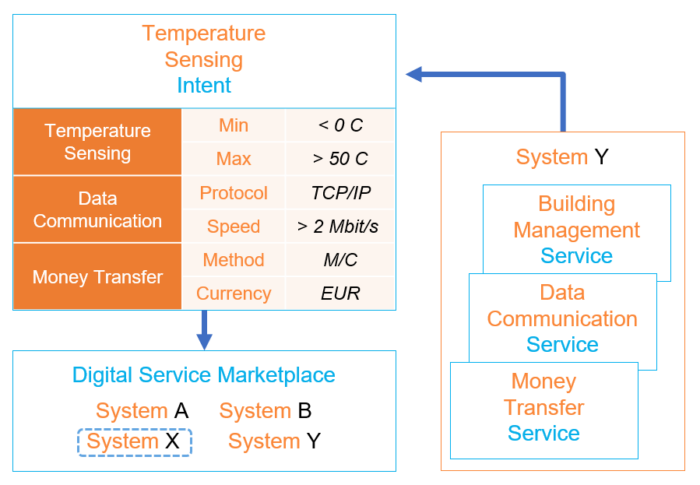

Complementary to the concept of capability is the concept of intent, which specifies what a system, or the party it represents, wants to achieve. Intent allows a system to express requirements to other systems that may be capable of fulfilling those requirements. For example, a building management system (System Y) may have a requirement for a temperature sensing capability offered as a service by another system.

System Y can express its requirement as an intent, which includes the values and value ranges of the primary and supporting capabilities it requires. A matchmaking (or composition) service can identify a participating system (System X) within a network (or marketplace) with capabilities that best match the requirements.

Systems must be able to express requirements in a way that recognizes capabilities but does not involve details on how those capabilities are realized, enabling constituent systems to understand and act on them. The way requirements are expressed must also allow them to change dynamically and must keep the required capabilities separate from their realization.
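A matchmaking step of this kind can be sketched in a few lines. The sketch below assumes that an intent and an offer are both expressed as value ranges over the same capability attributes, and that "best match" means the finest precision among offers that satisfy the intent. That ranking rule, along with the names `Intent`, `Offer`, `satisfies`, and `match`, is an assumption for illustration only.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Offer:
    """What a providing system says it can do (no realization details)."""
    system_id: str
    capability: str
    minimum: float
    maximum: float
    step: float
    supporting: frozenset = frozenset()


@dataclass(frozen=True)
class Intent:
    """What a requesting system needs, expressed only as capability values."""
    capability: str
    minimum: float
    maximum: float
    max_step: float
    supporting: frozenset = frozenset()


def satisfies(offer: Offer, intent: Intent) -> bool:
    return (
        offer.capability == intent.capability
        and offer.minimum <= intent.minimum       # covers the low end
        and offer.maximum >= intent.maximum       # covers the high end
        and offer.step <= intent.max_step         # precise enough
        and intent.supporting <= offer.supporting  # has all supporting capabilities
    )


def match(intent: Intent, offers: Iterable[Offer]) -> Optional[Offer]:
    candidates = [offer for offer in offers if satisfies(offer, intent)]
    # Assumed ranking: finest precision first, then the tightest range.
    return min(candidates, key=lambda o: (o.step, o.maximum - o.minimum), default=None)


offers = [
    Offer("system-x", "sense temperature", -10.0, 60.0, 0.5,
          frozenset({"data communication", "money transfer"})),
    Offer("system-w", "sense temperature", 5.0, 35.0, 0.1,
          frozenset({"data communication"})),
    Offer("system-z", "sense temperature", -20.0, 80.0, 2.0,
          frozenset({"data communication"})),
]

# System Y's requirement, stated without reference to any particular provider.
system_y_intent = Intent("sense temperature", 0.0, 40.0, 1.0,
                         frozenset({"data communication"}))

best = match(system_y_intent, offers)
```

Here `system-w` is rejected because its range does not cover the requirement and `system-z` because its precision is too coarse, leaving `system-x` as the match. Because the intent carries no realization details, it can be changed and re-matched at any time.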

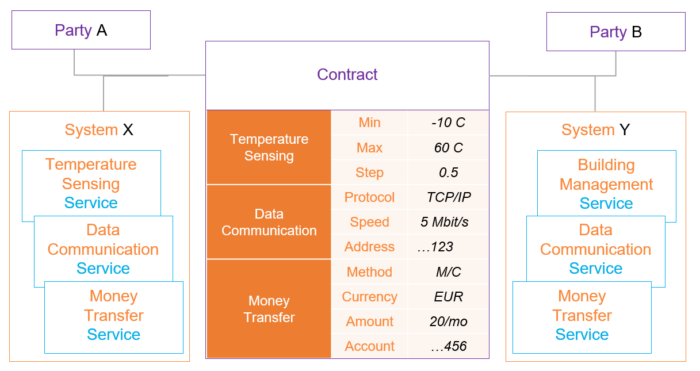

When a matchmaking service initiates a connection between two systems, metadata from each system, including service attributes, can be shared to define a contract. The contract between the connected systems, and their responsible parties, specifies the rights and obligations associated with services of each system and establishes parameters for interaction.
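One way to picture this step is a function that takes the metadata of both systems and produces a contract record. The sketch below is deliberately narrow: it only settles the interaction parameters (here, a shared protocol) and copies the provider's service attributes. Rights and obligations are left out, and every field name and example value is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SystemMetadata:
    system_id: str
    responsible_party: str
    protocols: tuple          # supported protocols, in order of preference
    service_attributes: dict  # e.g. the values describing an offered service


@dataclass
class Contract:
    provider_id: str
    consumer_id: str
    parties: tuple            # the responsible parties behind each system
    service_attributes: dict  # the service terms both sides rely on
    protocol: str             # the agreed parameter for interaction


def define_contract(provider: SystemMetadata, consumer: SystemMetadata) -> Contract:
    # Pick the consumer's most preferred protocol that the provider also supports.
    shared = [p for p in consumer.protocols if p in provider.protocols]
    if not shared:
        raise ValueError("the systems share no common protocol")
    return Contract(
        provider_id=provider.system_id,
        consumer_id=consumer.system_id,
        parties=(provider.responsible_party, consumer.responsible_party),
        service_attributes=dict(provider.service_attributes),
        protocol=shared[0],
    )


system_x = SystemMetadata(
    "system-x", "Sensor Provider", ("mqtt", "https"),
    {"capability": "sense temperature", "minimum": -10.0, "maximum": 60.0, "step": 0.5},
)
system_y = SystemMetadata("system-y", "Building Operator", ("https",), {})

contract = define_contract(provider=system_x, consumer=system_y)
```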

A multi-level system of systems, based on this universal metamodel for interoperability, can enable frictionless and dynamic interactions and information sharing for optimal decision-making and actions that drive high-value outcomes for end customers.

An agile, intelligent business, like a smart airport, can be composed from a marketplace of multi-vendor capabilities (e.g., sense, control, and actuate) that are offered and delivered as services. These services can be intelligently selected based on the specific characteristics of their capabilities to drive high-value, sustainable outcomes. When delivered, these multi-vendor services can compose value streams that can span edge to cloud systems.

Real-time Insights and Reactions through Event Sharing

Given the massive amounts of data from IoT and the requirements for real-time communication flows, it’s clear that a client-server, request-response architecture can no longer meet the necessary volume, latency, reliability, and security requirements.

Instead, systems can be composed of event-driven services that allow for real-time communication, enabling information to be exchanged in the form of events. These events reflect object state changes that can be synchronized between systems.
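The idea of synchronizing object state through events can be illustrated with a small in-process publish/subscribe sketch. A real deployment would typically rely on a message broker; the `EventBus` and `StateReplica` classes, the topic name, and the event keys below are assumptions made for this example.

```python
from collections import defaultdict
from typing import Callable


class EventBus:
    """A minimal in-process publish/subscribe channel."""

    def __init__(self) -> None:
        self._handlers: dict = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._handlers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        # Push the event to every subscriber; nobody has to poll for changes.
        for handler in list(self._handlers.get(topic, [])):
            handler(event)


class StateReplica:
    """A system's local copy of object state, kept current by events."""

    def __init__(self) -> None:
        self.state: dict = {}

    def apply(self, event: dict) -> None:
        # Each event carries the new value of one attribute of one object.
        self.state[(event["object_id"], event["attribute_id"])] = event["value"]


bus = EventBus()
building_system = StateReplica()
analytics_system = StateReplica()
bus.subscribe("state-changes", building_system.apply)
bus.subscribe("state-changes", analytics_system.apply)

bus.publish("state-changes",
            {"object_id": "sensor-17", "attribute_id": "temperature", "value": 21.5})
bus.publish("state-changes",
            {"object_id": "sensor-17", "attribute_id": "temperature", "value": 22.0})
```

After the two events are published, both subscribing systems hold the same latest value for the sensor without either having requested it.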

Today, event data from smart products and automation systems is stored and communicated in many different formats and lacks the semantic information needed to provide adequate context. Without context, a time-consuming normalization effort is required before that data can be utilized by advanced systems to effectively generate value.

To be universally understood, contextual events should be coupled to a clearly defined, standardized, and federated ontology for distributed systems. A universal event format can provide a “lowest common denominator” for distribution of system and object state changes.

Each event within a universal event format can represent a state change of a single attribute, which enables one event format to be utilized across any ontology class. The schema can include traditional time series elements (time and value) and metadata identifiers for ontology elements (attribute, class, and object) as shown below.
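A minimal sketch of such an event record follows, with the two time series elements (time and value) and three identifiers (attribute, class, and object). The field names, the use of JSON, and the ISO 8601 timestamp encoding are assumptions for illustration; the text does not prescribe a concrete serialization.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional, Union

Value = Union[float, int, str, bool]


@dataclass(frozen=True)
class Event:
    """One state change of a single attribute of a single object."""
    time: str          # ISO 8601 timestamp (assumed encoding)
    value: Value       # new value of the attribute
    attribute_id: str  # which attribute changed
    class_id: str      # ontology class of the object
    object_id: str     # which object changed

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @staticmethod
    def from_json(payload: str) -> "Event":
        return Event(**json.loads(payload))


def state_change(object_id: str, class_id: str, attribute_id: str, value: Value,
                 at: Optional[datetime] = None) -> Event:
    at = at or datetime.now(timezone.utc)
    return Event(at.isoformat(), value, attribute_id, class_id, object_id)


fixed_time = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)

# The same format carries a physical measurement and a commerce state change.
temperature_event = state_change("sensor-17", "TemperatureSensor", "temperature",
                                 21.5, fixed_time)
order_event = state_change("order-42", "Order", "status", "completed", fixed_time)
```

Because each event describes exactly one attribute, a consumer only needs the identifiers to look up meaning in the shared ontology; the event schema itself never changes as new classes are added.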

A universal event format coupled to a common ontology can effectively support the semantic heterogeneity of events in large and open implementations such as supply chains, airports, and cities. It provides event consumers with the minimal information necessary to react to any state change occurrence. This design pattern can support an overall architecture that is simple, scalable, and sustainable.

Digital Twins and Simulations for Optimizing Outcomes

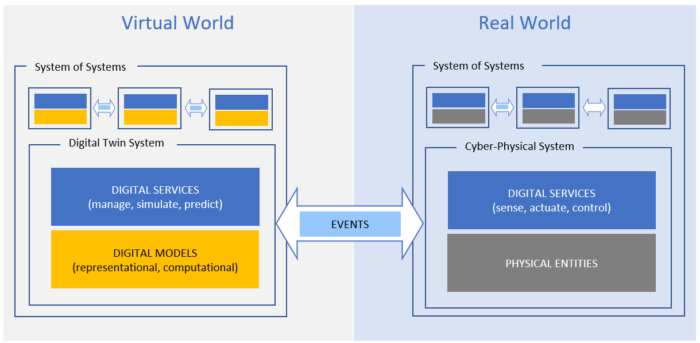

In the evolution of the industrial metaverse, the virtual and physical worlds will seamlessly interact to optimize business operations and deliver immersive customer experiences.

Together with digital twins, all contextualized event data can be aggregated and expanded to enable total simulation of the system of systems that represents an entire virtual environment. This allows end customers to visualize and test how individual parts of complex systems work together and can eliminate risks prior to physical system implementations. Businesses will greatly benefit from a holistic visual representation to effectively manage day-to-day operations as well as envision future scenarios to optimize processes.

As an executable virtual representation of the physical system, the digital twin system consumes learnings and experiences from real-world processes to update the digital twin model, intelligently connecting it to the cyber-physical system in real time. Properly abstracted, a universal TLO and common event-driven services can support real-time information exchange between interoperable digital twin systems in a virtual world, cyber-physical systems in the real world, and distributed edge-to-cloud systems bridging both worlds.

This approach to solution design will enable smart product OEMs and solution providers to differentiate through intelligent services built on a foundational layer of semantic interoperability. When integrated with intelligent services, these interoperating systems have the potential to create optimized outcomes for businesses and society.

Part 2 will apply this approach to compose a smart airport from a service marketplace enabling immersive traveler experiences and precision operations.